
Expand your ElasticSearch cluster online without any downtime.

New day, new task for an operations guy. This time I have been assigned to expand one of our production ElasticSearch clusters. The goal: do it without any downtime for either our application or the cluster. Simple enough, but you never know what can happen down the road, so let's prepare the expansion and see what we can expect from this activity.

At the moment, our cluster consists of 12 old nodes, which we are going to decommission and replace with 6 shiny brand-new nodes. This should give us more breathing room for the future.

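Thanks to ElasticSearch's flexibility and scalability, the expansion should be quite easy and almost entirely handled by ElasticSearch itself. The high-level steps look as follows; we will get into the details of each step later:

1. Prepare the new nodes.
2. Disable shard allocation.
3. Start up the new nodes and let them join the cluster.
4. Re-enable shard allocation and let the cluster rebalance.
5. Drain and decommission the old nodes one by one.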

I won't go into much detail here, as your ElasticSearch installation might differ, but make sure that the following is set properly on the new nodes:

No issues on our side, as the nodes are provisioned via an Ansible playbook, so we can proceed to the next step.

Usually you don't need this step, but my plan is to join all the new nodes to the cluster at once, and I don't want ElasticSearch to assign shards to them straight away because I first want to check that the nodes are fully operational. Shard allocation can be disabled cluster-wide via the following API call:

root@es-master-1:~# curl -X PUT "localhost:9200/_cluster/settings" \
> -H 'Content-Type: application/json' \
> -d'{
> "persistent": {
> "cluster.routing.allocation.enable": "none"
> }
> }'
{"acknowledged":true,"persistent":{"cluster":{"routing":{"allocation":{"enable":"none"}}}},"transient":{}}
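Since the same setting is flipped back later on, it can be handy to wrap the call in a small shell function. This is only an illustrative sketch: the function names `allocation_body` and `set_allocation` and the `ES_URL` variable are hypothetical, and it assumes `curl` is available on the host. Passing `null` removes the setting so the default (`all`) applies again.

```shell
#!/bin/sh
# Hypothetical helpers (not part of ElasticSearch) for toggling shard allocation.
# ES_URL is an assumed variable; it falls back to localhost:9200.

# allocation_body VALUE: print the cluster settings body for VALUE.
# "none" disables allocation; "null" removes the setting so "all" applies again.
allocation_body() {
  case "$1" in
    null) value=null ;;
    *) value="\"$1\"" ;;
  esac
  printf '{"persistent":{"cluster.routing.allocation.enable":%s}}\n' "$value"
}

# set_allocation VALUE: send the body to the cluster settings API.
set_allocation() {
  allocation_body "$1" |
    curl -s -X PUT "${ES_URL:-localhost:9200}/_cluster/settings" \
      -H 'Content-Type: application/json' --data-binary @-
}
```

Usage: `set_allocation none` before starting the new nodes, and `set_allocation null` once they have joined.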

Once shard allocation is disabled, we can move on and start up the new ElasticSearch instances.

Start up your nodes via whatever method is most suitable for your ElasticSearch deployment, and check that they have joined the cluster without any issues.

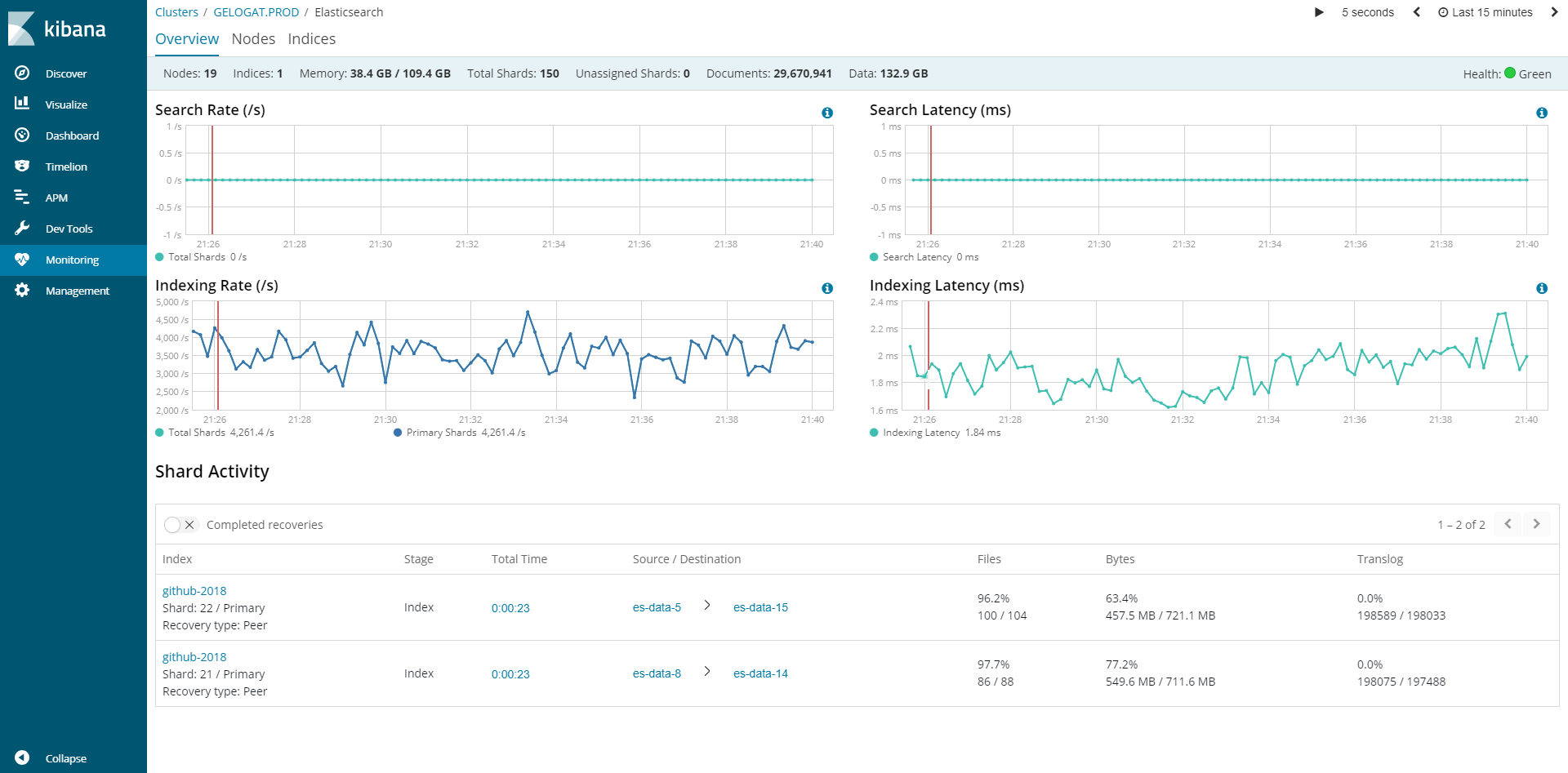
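Eyeballing the node list works, but with several new nodes a small check helps. A sketch, assuming the new data nodes are called `es-data-13` to `es-data-18` (hypothetical names, adjust to yours): the hypothetical `missing_nodes` function reads the output of the `_cat/nodes` API restricted to the `name` column and prints every expected node that has not joined.

```shell
#!/bin/sh
# missing_nodes NAME...: read `_cat/nodes?h=name` output on stdin and print
# each NAME that is not in it. No output means all nodes have joined.
missing_nodes() {
  # _cat output may be padded with spaces, so keep only the first field.
  joined=$(awk '{ print $1 }')
  for name in "$@"; do
    # -x: whole-line match, -F: treat the name as a fixed string.
    printf '%s\n' "$joined" | grep -qxF "$name" || printf '%s\n' "$name"
  done
}
```

Usage: `curl -s "localhost:9200/_cat/nodes?h=name" | missing_nodes es-data-13 es-data-14 es-data-15 es-data-16 es-data-17 es-data-18`.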

Time to re-enable shard allocation and wait until the cluster rebalances and moves some of the shards to our new data nodes. Setting the value to null removes the setting, so the default (all) applies again:

root@es-master-1:/app/products/elasticsearch/logs# curl -X PUT "localhost:9200/_cluster/settings" -H 'Content-Type: application/json' -d'
> {
> "persistent": {
> "cluster.routing.allocation.enable": null
> }
> }
> '
{"acknowledged":true,"persistent":{},"transient":{}}

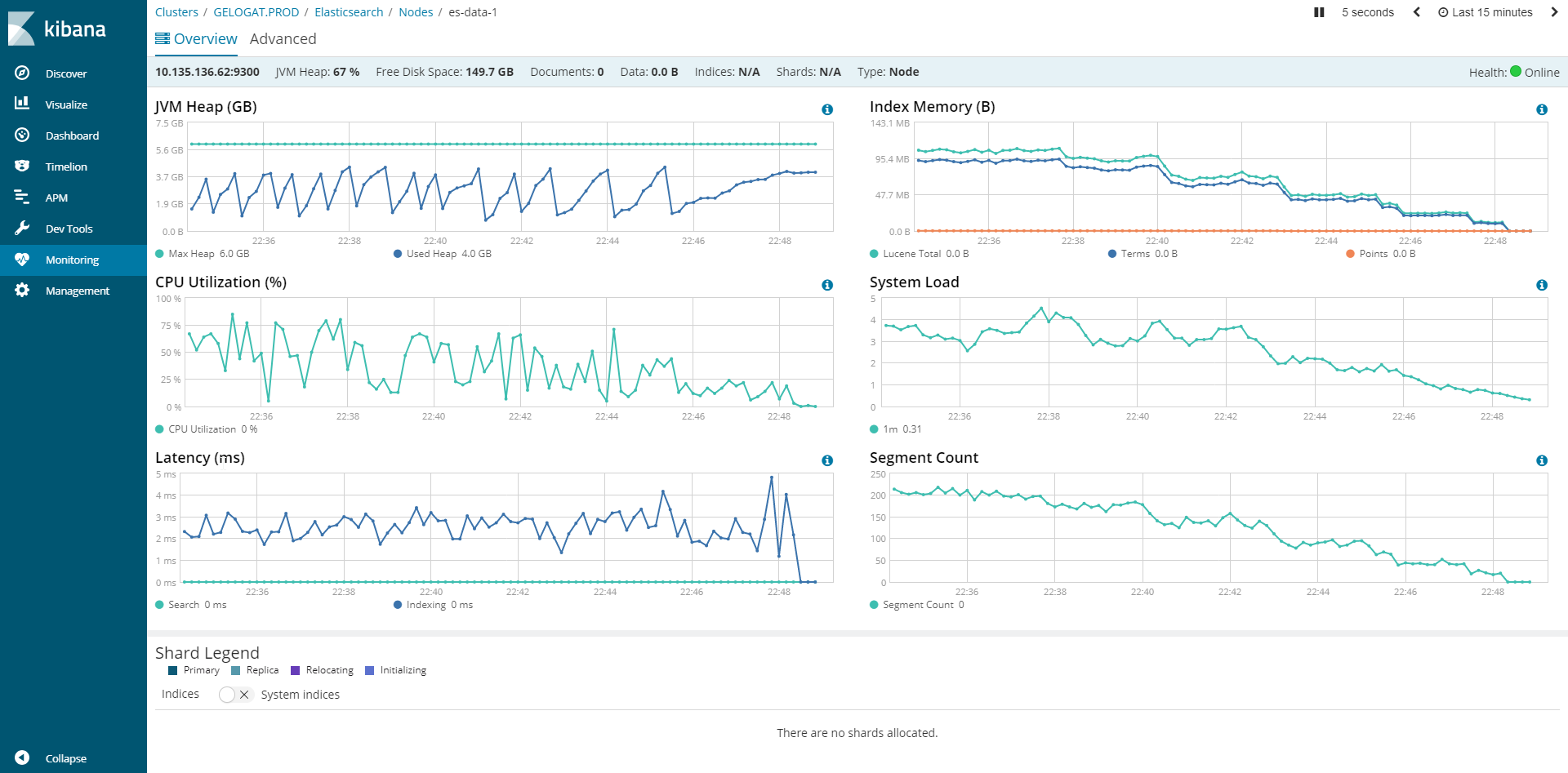
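Instead of watching the screen until the relocations stop, you can let the cluster health API block until the cluster is green and no shards are relocating. A sketch; the function names and the 30-minute default timeout are my own assumptions, not something ElasticSearch prescribes.

```shell
#!/bin/sh
# health_ok: read a _cluster/health response on stdin and succeed only if the
# wait conditions were met before the timeout expired.
health_ok() {
  grep -q '"timed_out":false'
}

# wait_for_rebalance [TIMEOUT]: block until the cluster is green with no
# relocating shards, or until TIMEOUT (default 30m, an assumption) expires.
wait_for_rebalance() {
  curl -s "${ES_URL:-localhost:9200}/_cluster/health?wait_for_status=green&wait_for_no_relocating_shards=true&timeout=${1:-30m}" |
    health_ok
}
```

A zero relocation count only means nothing is moving at that moment; the balancer may start another round shortly after, so a second look at the shard distribution doesn't hurt.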

Now the fun begins: the shards have been rebalanced, and we can start decommissioning our old nodes. First, we have to make sure that no shards are allocated to the node we are about to decommission. To do that, we move all shards away from it with the following command:

root@es-master-1:/app/products/elasticsearch/logs# curl -X PUT "localhost:9200/_cluster/settings" -H 'Content-Type: application/json' -d'
> {
> "transient" : {
> "cluster.routing.allocation.exclude._ip" : "10.135.136.62"
> }
> }
> '
{"acknowledged":true,"persistent":{},"transient":{"cluster":{"routing":{"allocation":{"exclude":{"_ip":"10.135.136.62"}}}}}}
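The `exclude._ip` filter also accepts a comma-separated list, so several old nodes could be drained in one go, provided the remaining nodes have enough disk space for their shards. Keep in mind that each update replaces the previous value: when you move on to the next node, the previous one is no longer excluded and should already be switched off. Also note that transient settings are deprecated in recent ElasticSearch releases (7.16 onwards), where `persistent` is the recommended choice. A sketch with hypothetical function names, using the same transient setting as the command above:

```shell
#!/bin/sh
# exclude_body IP_LIST: print the settings body that drains every node whose
# IP is in the comma-separated IP_LIST.
exclude_body() {
  printf '{"transient":{"cluster.routing.allocation.exclude._ip":"%s"}}\n' "$1"
}

# drain_ips IP_LIST: apply the exclusion via the cluster settings API.
# ES_URL is an assumed variable; it falls back to localhost:9200.
drain_ips() {
  exclude_body "$1" |
    curl -s -X PUT "${ES_URL:-localhost:9200}/_cluster/settings" \
      -H 'Content-Type: application/json' --data-binary @-
}
```

Usage: `drain_ips 10.135.136.62,10.135.136.63` (the second address is hypothetical).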

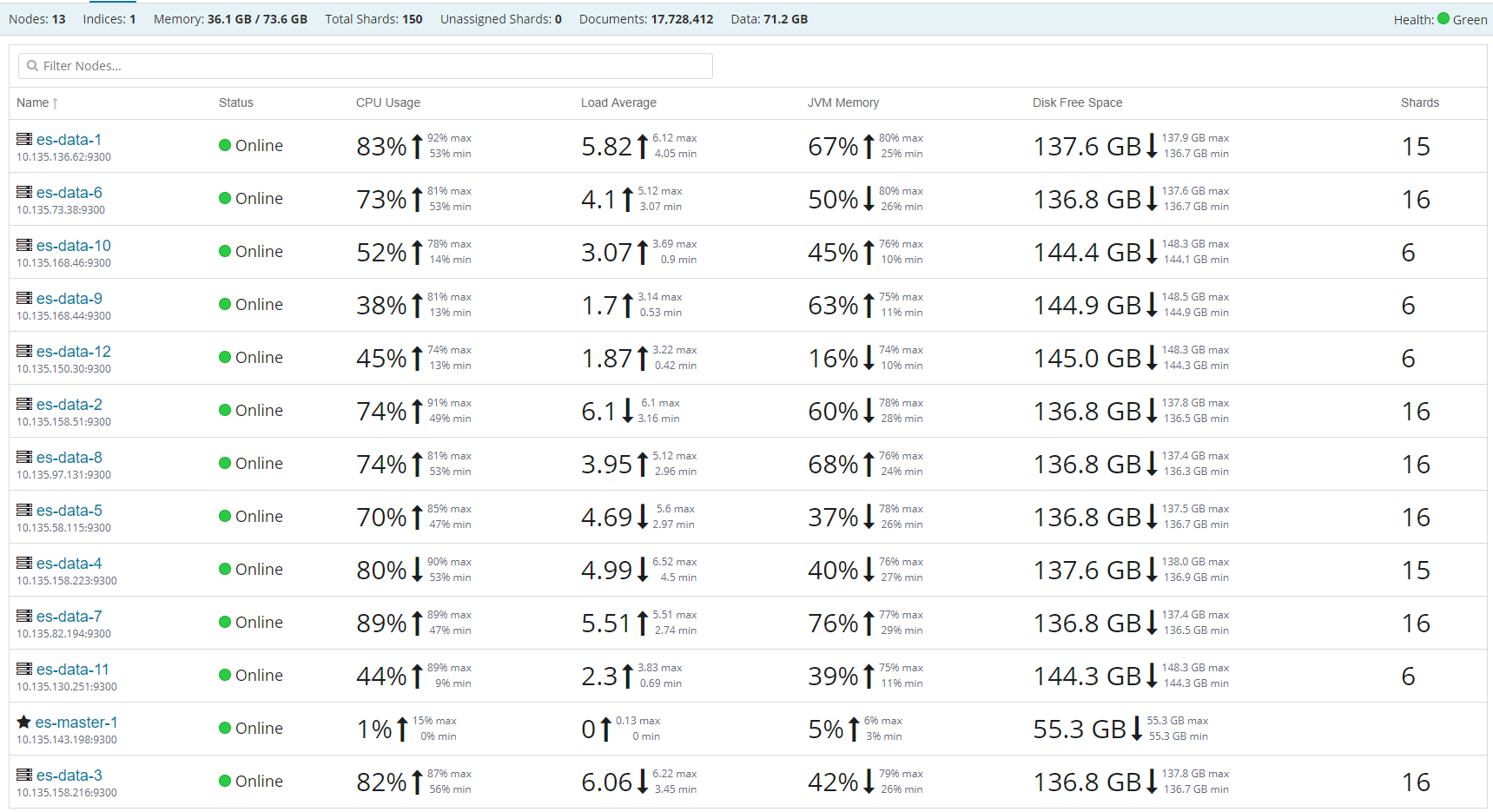

As you can see, ElasticSearch is relocating all the shards away from the node. Once none are left, the node can be safely switched off.
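To know when the node is empty without watching the UI, you can poll the `_cat/shards` API with only the `ip` column and count the shards still reported on the excluded address. A sketch under these assumptions: the function names are hypothetical, the poll interval defaults to 30 seconds, and a shard that is still relocating is reported on its source node's IP until the move completes.

```shell
#!/bin/sh
# shards_on_ip IP: read `_cat/shards?h=ip` output on stdin and print how many
# shard copies are still reported on IP (unassigned shards have an empty IP).
shards_on_ip() {
  awk -v ip="$1" '$1 == ip { n++ } END { print n + 0 }'
}

# wait_until_drained IP [INTERVAL]: poll every INTERVAL seconds (default 30)
# until no shards are left on IP. Failed requests are simply retried.
wait_until_drained() {
  while :; do
    if shards=$(curl -sf "${ES_URL:-localhost:9200}/_cat/shards?h=ip"); then
      left=$(printf '%s\n' "$shards" | shards_on_ip "$1")
      [ "$left" -eq 0 ] && return 0
      echo "$left shard(s) still on $1"
    fi
    sleep "${2:-30}"
  done
}
```

Usage: `wait_until_drained 10.135.136.62 && echo "safe to stop es-data-1"`.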

Now that all the shards have been moved off the es-data-1 node, we can switch it off and remove it from the cluster. Rinse and repeat until only the new nodes are left in your cluster.

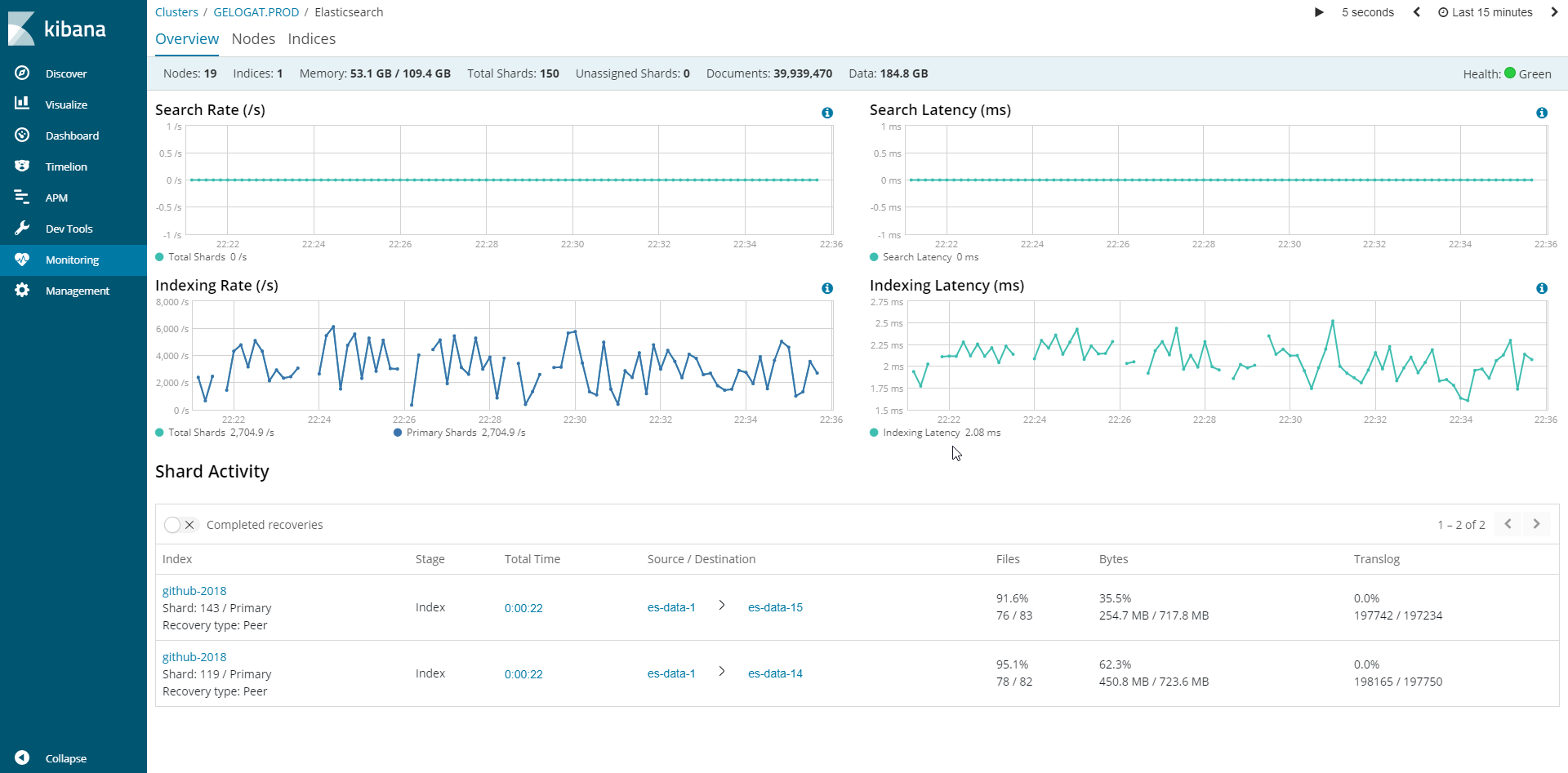

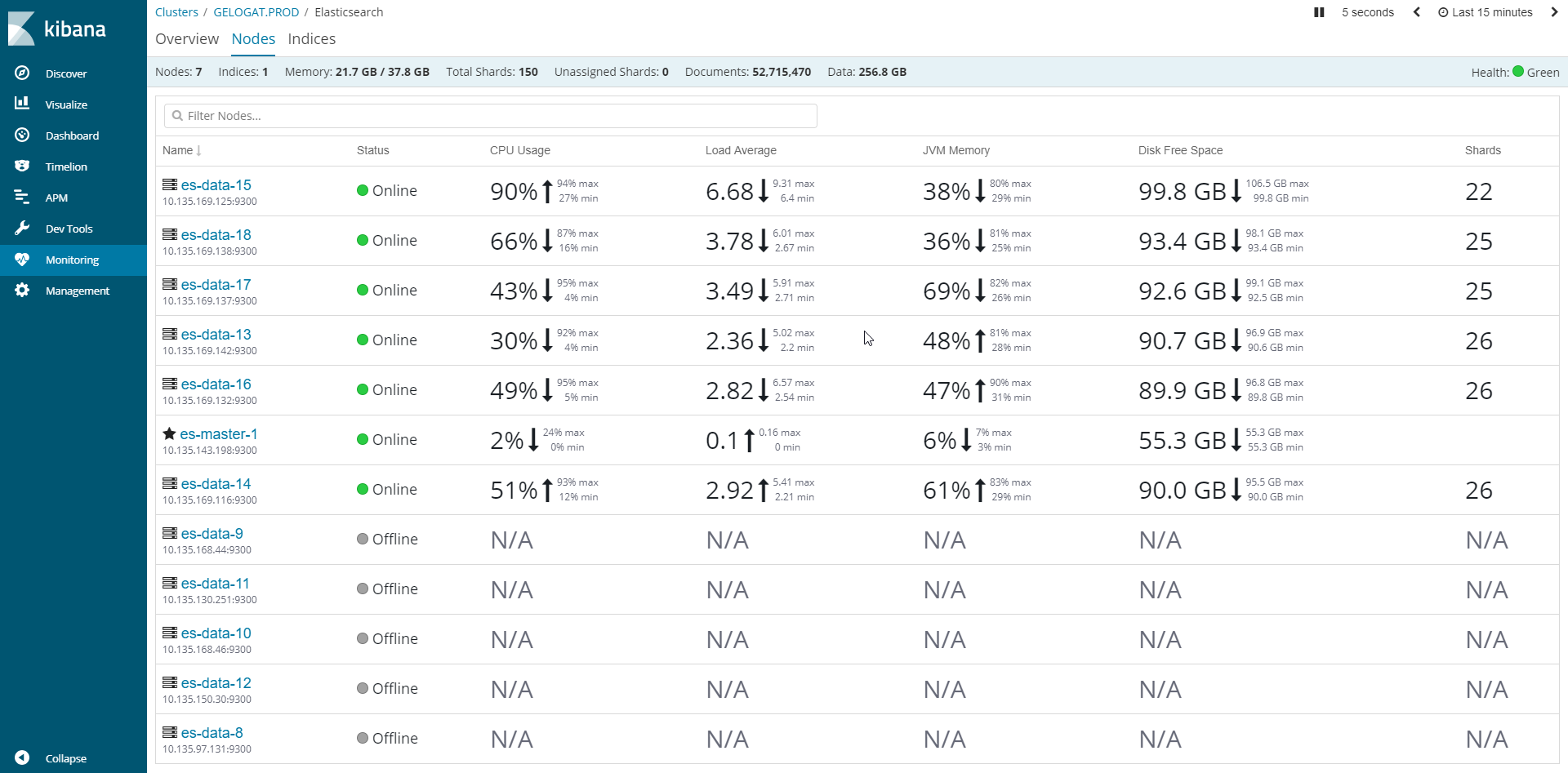

Once I had decommissioned all the old nodes, I ended up with something like this:

A fully functional cluster running on 6 brand-new nodes, with no downtime needed at all. Just make sure, of course, that you don't perform this during peak hours. :)
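One small clean-up step worth adding at the end: the last `exclude._ip` value stays in the cluster settings until it is reset (or, being transient, until a full cluster restart). If that address is ever reused by a new node, the node would never receive any shards. A sketch that resets the filter and checks the settings afterwards; the function names are hypothetical.

```shell
#!/bin/sh
# clear_exclusion: reset the transient IP exclusion so no node is filtered out.
# ES_URL is an assumed variable; it falls back to localhost:9200.
clear_exclusion() {
  curl -s -X PUT "${ES_URL:-localhost:9200}/_cluster/settings" \
    -H 'Content-Type: application/json' \
    -d '{"transient":{"cluster.routing.allocation.exclude._ip":null}}'
}

# exclusion_cleared: read a GET _cluster/settings response on stdin and
# succeed only if no allocation exclude filter is left in it.
exclusion_cleared() {
  ! grep -q '"exclude"'
}
```

Usage: `clear_exclusion && curl -s "localhost:9200/_cluster/settings" | exclusion_cleared && echo "no exclusions left"`.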